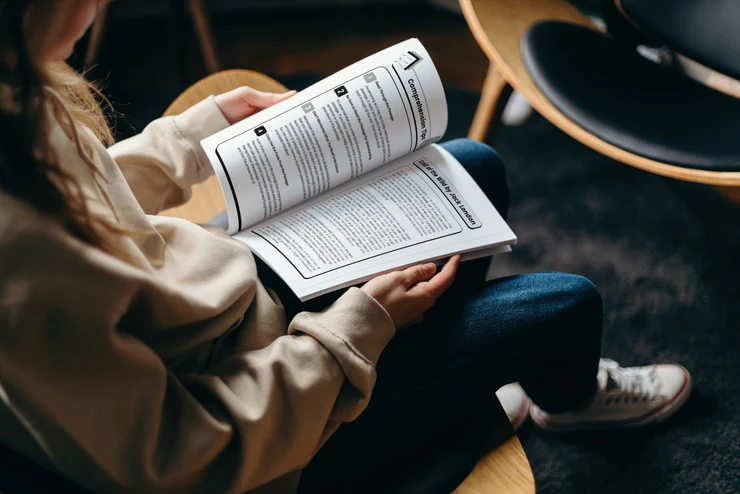

Agentic artificial intelligence (AI) seems like an enterprise’s ideal. Most AI requires constant oversight and guidance, while agentic versions are autonomous and self-motivated. Theoretically, the adaptability should reduce burdens on workers. However, it may need more attention than ever as new security threats take advantage of these independent decision-makers.

What Threats Does Agentic AI Pose?

Just as generative AI introduced novel cyberattack types, so will agentic AI. Threat actors want to manipulate the freedom these models have to execute new kinds of data breaches. This makes autonomous cyberattacks the most threatening outcome.

Prompt injection is one method threat actors can use. This is when hackers force agentic AI to submit malicious inputs that appear normal. These could encourage AI to generate malware or distribute phishing attempts faster than a human could.

Data poisoning is another concern, primarily as agentic AI could work among multiple datasets. A hacker could quickly disrupt well-curated, ethical training. The now-poisoned information trains the AI to contradict compliance, leading to harmful activity on a large scale. Case studies show poisoning 0.01% of a massive dataset would only cost $60, proving how accessible this is to criminal organizations.

Smart malware, which can be a part of any attack strategy, works well with AI tools. In agentic AI, it can tactfully avoid being found as the machine learning algorithms learn. Evading discovery is its strategy, and it uses agentic AI’s biggest strength — dynamic operating behaviors.

How Can Businesses Stay Safe?

Staying safe against these threats will protect data governance efforts. What actions can companies take to strengthen defenses against agentic AI?

Robust Encryption

Data leakage is a high risk with any AI, especially with these models. Hackers can prompt them to release unprotected information with a few clicks. The need for strong encryption has never been higher because of this increased chance of exposure. It will protect against attacks like double extortion ransomware and social engineering by protecting employees’ privacy.

Continuous AI Monitoring

Most agentic AIs work without human intervention. This makes identifying an oncoming or current threat more challenging. They operate independently, driven by their decisions. It can be a breath of fresh air in the tech world to trust an asset and leave it unmonitored to execute tasks.

However, teams must avoid this with agentic AI. Oversight is just as, if not more, crucial. Early detection, isolation and remediations only occur with monitoring tools and automation to alert teams of potential breaches. Monitoring strategies can include:

- Anomaly detection

- Explainable AI

- Advanced authentication

- Data cleaning

Segmentation

Other AI varieties operate within specific parameters, and their datasets are fixed. Agentic AI goes between multiple network areas and can pull from several information sources. Therefore, they travel over a greater surface area, which leaves them more vulnerable. The expanded attack surface demands segmentation, especially if deploying multiple agentic AI.

Companies can isolate agentic AI to the places where it provides the greatest benefit, although this may limit its functionality. The goal is to prevent hackers from moving laterally in networks if they find a backdoor.

Compliance and Governance Adherence

Numerous regulations offer support on how to establish foundational protections against AI attack variants, while agentic-specific insights are forthcoming as they become mainstream.

Teams should still refer to pivotal frameworks, like NIST, ISO and the GDPR, to establish defenses. Other entities, such as the Department of Defense, also have comprehensive rules on protecting unclassified information contractors handle. Additionally, business leaders should encourage analysts to regularly review AI compliance, as the self-learning nature of these tools could cause them to eventually deviate from these rules.

Generative AI is accessible to anyone in a workplace. When employees use it without permission, known as shadow AI, it threatens the sanctity of managed agentic AI tools. Any staffer could unintentionally submit a malicious prompt and cause a breach. Corporations must institute strict permissions to prevent unmonitored AI usage that could compromise governance.

Having Agency Over Agentic AI Attacks

Cybercriminals see opportunities in AI every time experts innovate. Companies and analysts must be several steps ahead of them. Engineers, data scientists, IT professionals and other colleagues are responsible for designing well-defended workplaces for AI to navigate. Additionally, the models need measures to withstand incoming threats. In a world of never-ending technological creativity, the defenders need greater resourcefulness.